PyPy 2.3.1 versus CPython 2.7.6 on very large builds

A good build practice is to keep the number of build tasks to a minimum. Fewer tasks mean fewer objects to process (less pressure on the Python interpreter), less data to store (data serialization), and fewer processes to spawn (less pressure on the OS). It is therefore a good idea to enable batches if the compiler supports them (see waflib/extras/unity.py and waflib/extras/batched_cc.py for example).

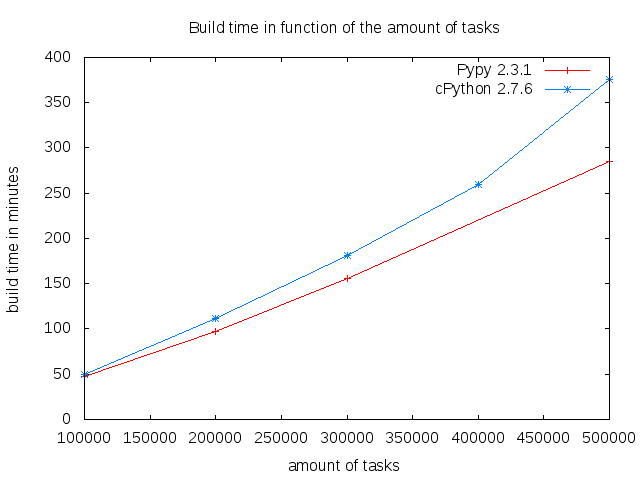
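To illustrate how batching reduces the task count, here is a minimal sketch in plain Python. It is not the code from the waf extras mentioned above; the `make_batches` helper, the file names, and the batch size of 20 are assumptions chosen only for illustration.

```python
def make_batches(sources, batch_size):
    """Group source files into lists of at most batch_size files.

    Hypothetical helper: each returned list stands for one compile task.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return [sources[i:i + batch_size]
            for i in range(0, len(sources), batch_size)]


# Hypothetical project made of 1000 C files
sources = ["src/file_%d.c" % i for i in range(1000)]

unbatched = make_batches(sources, 1)   # one compile task per file
batched = make_batches(sources, 20)    # one compile task per 20 files

print("%d tasks without batching" % len(unbatched))  # 1000 tasks
print("%d tasks with batches of 20" % len(batched))  # 50 tasks
```

Under these assumptions the build creates 50 task objects instead of 1000, so there are fewer objects to keep in memory, fewer entries to serialize, and fewer compiler processes to start.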

Although very large builds should be uncommon, it is interesting to see how the Python interpreter behaves at the limits. Here are, for example, a few results from playground/compress with a large number of tasks:

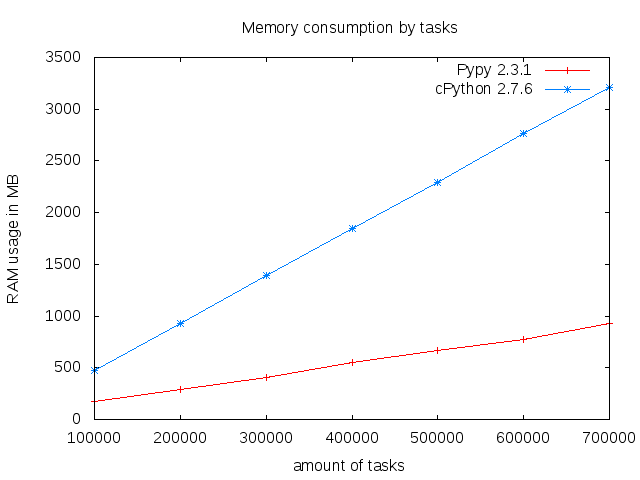

The runtime difference between CPython and PyPy becomes noticeable at approximately 100K tasks (1 minute). It then stretches to about 90 minutes for 500K tasks. One explanation for these figures can be found in the memory usage.

Since the PyPy interpreter requires much less memory than CPython, it is more likely to remain efficient with a large number of objects.
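One rough way to observe this kind of effect is to create a large number of small objects and look at the peak memory reported by the OS. The sketch below is only an approximation, not the benchmark used for the figures above: `FakeTask` is a hypothetical stand-in and not a waf class, and the `resource` module is only available on Unix-like systems. The same script can be run under both interpreters to compare them.

```python
import resource
import time


class FakeTask(object):
    """Hypothetical stand-in for a build task (not a waf class)."""

    def __init__(self, idx):
        self.idx = idx
        self.inputs = ["in_%d" % idx]
        self.outputs = ["out_%d" % idx]


def measure(count):
    """Create `count` objects and return (number created, seconds, peak RSS).

    ru_maxrss is the peak resident set size of the whole process;
    it is reported in kilobytes on Linux and in bytes on macOS.
    """
    start = time.time()
    tasks = [FakeTask(i) for i in range(count)]
    elapsed = time.time() - start
    peak = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss
    return len(tasks), elapsed, peak


# ru_maxrss is a high-water mark for the process, so the values printed
# for successive sizes can only stay equal or grow.
for count in (10000, 100000):
    created, elapsed, peak = measure(count)
    print("%d objects: %.3f s, peak RSS %d" % (created, elapsed, peak))
```

The absolute numbers depend on the machine and on the interpreter version, so only the comparison between two runs of the same script is meaningful.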