Stop blaming the Chinese, the web is broken

GitHub was under attack at the same time as we were deciding to move the Waf project to it (informal poll results). The source of this particular attack is thought to be China, and such "cyber" problems are gradually taking on a political dimension.

Yet one may wonder why such attacks can happen in the first place. Why is the web that fragile? Some suggest encrypting traffic to mitigate the problem. Such a mitigation would only work if all sites and all users mainly used HTTPS.

A much more realistic solution for this particular issue could be to have web browsers disallow background requests to external sites unless the URL is explicitly meant for that purpose. For example, if such requests were only permitted to domain names containing keywords such as "tracker" (tracker.site.domain) or "api" (api.site.domain), then such attacks would be prevented by design. The only drawback would be for advertisers, as their requests would become a little too easy to filter. We can bet that the idea will be rejected for backward compatibility reasons.
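The rule proposed above could be sketched roughly as follows. This is only an illustration and not how any browser works: the function name `is_background_request_allowed`, the `ALLOWED_LABELS` set, and the choice to match the keyword against the leftmost label of the host name are all assumptions made for the example.

```python
from urllib.parse import urlsplit

# Hypothetical keyword list; the text only mentions "tracker" and "api".
ALLOWED_LABELS = {"tracker", "api"}


def is_background_request_allowed(page_url: str, request_url: str) -> bool:
    """Decide whether a page may issue a background request to request_url.

    Both arguments are expected to be absolute URLs.
    """
    page_host = (urlsplit(page_url).hostname or "").lower()
    target_host = (urlsplit(request_url).hostname or "").lower()
    if not target_host:
        # No host to inspect (for example a relative URL): refuse by default.
        return False
    if target_host == page_host:
        # Requests back to the page's own host are not "external".
        return True
    # Assumption: the keyword must be the leftmost label, as in api.site.domain.
    first_label = target_host.split(".")[0]
    return first_label in ALLOWED_LABELS
```

Under this sketch, a script on some unrelated page could still call `https://api.site.domain/...`, but a background request to `https://site.domain/user/repo` would be refused, which is the kind of traffic used to flood a regular site.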

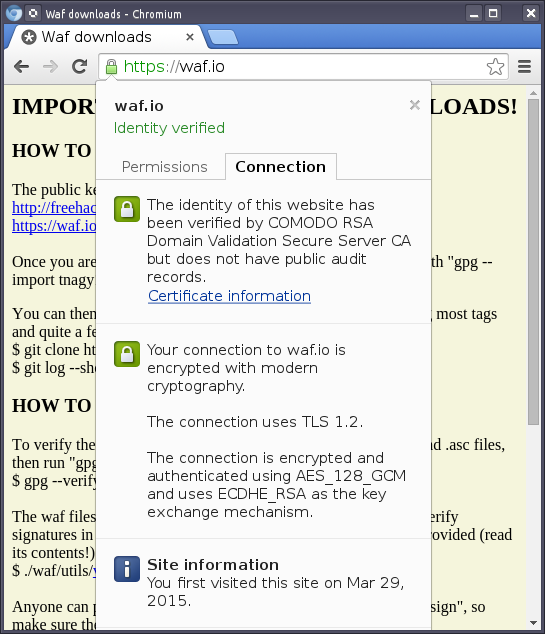

The Waf site at least uses HTTPS, and the new padlock in the URL bar definitely looks nice. The confidentiality benefits are lower than we would like to believe, though. Certificate authorities have long been found issuing certificates that can be used to impersonate sites. Sometimes the matter becomes apparent, and fingers start pointing at people again.

Now, such basic impersonation would be easy to detect if, for example, site fingerprints were readily accessible instead of being buried behind layers of GUIs in web browsers. Certificate authorities would then be less tempted to allow fake certificates to be created.
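To give an idea of how little is needed to expose a fingerprint, here is a minimal sketch using only the Python standard library. The function names `fingerprint_from_pem` and `fetch_fingerprint` are made up for this example, and SHA-256 is an assumed choice of hash.

```python
import hashlib
import ssl


def fingerprint_from_pem(pem_cert: str) -> str:
    """Return the SHA-256 fingerprint of a PEM certificate as colon-separated hex."""
    der = ssl.PEM_cert_to_DER_cert(pem_cert)
    digest = hashlib.sha256(der).hexdigest().upper()
    return ":".join(digest[i:i + 2] for i in range(0, len(digest), 2))


def fetch_fingerprint(host: str, port: int = 443) -> str:
    """Download the certificate presented by host:port and fingerprint it.

    The certificate is fetched without being validated; the point is only
    to display what the server presents.
    """
    return fingerprint_from_pem(ssl.get_server_certificate((host, port)))
```

Calling `fetch_fingerprint("example.org")` (a placeholder host) from two different networks and comparing the results is a crude way to notice that someone in between is presenting a different certificate.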

But even with easier access to the fingerprints, and even with certificate pinning, the whole certificate system should be considered broken at its very core: trust only works when several independent pairs of eyes are involved. It is well known, for example, that sensitive operations in banks require two people to open an armored door. This principle has also been rediscovered in aviation: airlines may require two people in the cockpit at all times.

A more robust scheme for the whole web would be to have site certificates signed by several authorities. For example, site certificates should be signed by one (or two) of the current certificate authorities (Comodo, Verisign, etc.) and by another key whose certificate is published at the registrar level. Everyone would then obtain the second authority's key through the whois information (elliptic-curve cryptography does not require the keys to be very long). This second certificate could be used to sign DNS records as well: DNSSEC is not widely used yet, and no one actually cares.
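The acceptance rule described above (at least one regular authority and the registrar-level key must both vouch for the certificate) can be illustrated with a small sketch. Everything here is hypothetical: the names `sign` and `is_certificate_trusted` are invented, and HMAC is used only as a runnable stand-in for a real public-key signature such as ECDSA.

```python
import hashlib
import hmac
from typing import Dict


def sign(key: bytes, certificate: bytes) -> bytes:
    # Stand-in for a real public-key signature; HMAC is used only so that
    # the sketch runs with the standard library alone.
    return hmac.new(key, certificate, hashlib.sha256).digest()


def is_certificate_trusted(
    certificate: bytes,
    signatures: Dict[str, bytes],
    ca_keys: Dict[str, bytes],
    registrar_key: bytes,
) -> bool:
    """Accept only if a known CA AND the registrar-level key both signed."""
    ca_ok = any(
        name in ca_keys
        and hmac.compare_digest(sig, sign(ca_keys[name], certificate))
        for name, sig in signatures.items()
        if name != "registrar"
    )
    registrar_sig = signatures.get("registrar")
    registrar_ok = registrar_sig is not None and hmac.compare_digest(
        registrar_sig, sign(registrar_key, certificate)
    )
    return ca_ok and registrar_ok
```

With such a rule, a certificate authority that issues a fake certificate on its own achieves nothing, because the second signature is still missing.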

Yet web browsers seem to do their best to keep the web as insecure as possible: they focus on features that add little security value, remain essentially insecure, and still exhibit classes of bugs that we thought long gone.

It is high time we stopped blaming the Chinese or the Russians: our systems are broken and we must redesign them.